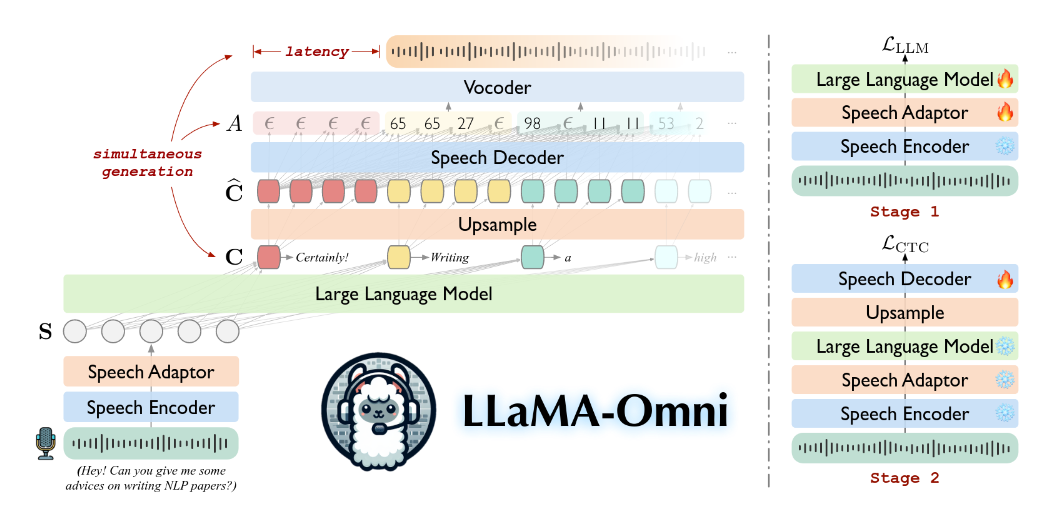

Research works based on LLaMA 3 (Dubey et al., 2024) have been popping up since the release of Llama 3.1 and its paper in July. For my project, I have been focusing on multimodal papers built on LLaMA 3, and one of the primary papers I am reading right now is LLaMA-Omni (Fang et al., 2024). It adds the speech modality on top of the text modality already supported by the Llama-3.1-8B-Instruct model. The work brings the end-to-end speech interaction capability of GPT-4o (OpenAI et al., 2024) to open-source LLMs, where it had been missing. In the simplest terms, the authors wanted the model to accept speech as input and generate speech as output.

The authors use a novel technique to achieve this goal. First, the speech \(X^s\) passes through a frozen speech encoder, in this case the encoder of Whisper-large-v3 (Radford et al., 2023). Whisper takes 30-second windows of log-Mel spectrogram and outputs a hidden representation \(\vec{H} = [\vec{h}_1, \ldots, \vec{h}_N]\). \(\vec{H}\) is then downsampled by concatenating every \(k\) consecutive vectors along the feature dimension, which gives \(\vec{H}'\) with length \(N/k\) instead of \(N\). Finally, this downsampled representation goes through a speech adaptor (a typical feed-forward neural network) that maps it into the LLM's embedding space. The text and speech instructions are then combined into a single prompt for the LLM, where the text is fixed for all samples and only the speech varies. You can see the template below.

> You are a helpful language and speech assistant. You are able to understand the speech content that the user provides, and assist the user with a variety of tasks using natural language.
>
> `<speech>`
>
> Please answer the questions in the user’s input speech.
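The encoder side described above (downsampling, adaptor, and filling the `<speech>` slot of the template) can be sketched in a few lines of NumPy. This is a minimal illustration, not the LLaMA-Omni code: the two-layer ReLU MLP used as the adaptor, the toy widths, the illustrative \(k = 5\), and the helper names (`downsample`, `speech_adaptor`, `toy_embed`, `build_llm_input`) are all assumptions, and random matrices stand in for the frozen Whisper output and the trained weights. The one real-world number is \(N = 1500\), the number of frames Whisper's encoder emits for a full 30-second window (one every 20 ms). The chat-format special tokens that wrap the real prompt are omitted.

```python
import numpy as np

SPEECH_SLOT = "<speech>"


def downsample(H: np.ndarray, k: int) -> np.ndarray:
    """Concatenate every k consecutive frames of H (shape N x d) along the
    feature axis, giving shape (N // k, k * d). Trailing frames that do not
    fill a whole group are dropped (an assumption of this sketch)."""
    n, d = H.shape
    groups = n // k
    return H[: groups * k].reshape(groups, k * d)


def speech_adaptor(H_prime, W1, b1, W2, b2):
    """Assumed two-layer MLP with ReLU mapping k*d features to the LLM width."""
    return np.maximum(0.0, H_prime @ W1 + b1) @ W2 + b2


def toy_embed(text, table, dim, rng):
    """Hypothetical stand-in for the LLM tokenizer + embedding lookup:
    each whitespace-separated word gets a cached random vector."""
    rows = []
    for word in text.split():
        if word not in table:
            table[word] = rng.standard_normal(dim)
        rows.append(table[word])
    return np.stack(rows) if rows else np.zeros((0, dim))


def build_llm_input(template, speech_emb, table, rng):
    """Embed the text around the <speech> slot and splice the speech embeddings in."""
    prefix, suffix = template.split(SPEECH_SLOT)
    dim = speech_emb.shape[1]
    return np.concatenate(
        [toy_embed(prefix, table, dim, rng), speech_emb, toy_embed(suffix, table, dim, rng)],
        axis=0,
    )


rng = np.random.default_rng(0)
N, d, k, d_llm, d_hidden = 1500, 8, 5, 16, 32  # toy widths; N = one 30 s Whisper window
H = rng.standard_normal((N, d))                 # stand-in for the frozen Whisper output
H_prime = downsample(H, k)                      # shape (300, 40)
W1, b1 = rng.standard_normal((k * d, d_hidden)) * 0.1, np.zeros(d_hidden)
W2, b2 = rng.standard_normal((d_hidden, d_llm)) * 0.1, np.zeros(d_llm)
S = speech_adaptor(H_prime, W1, b1, W2, b2)     # shape (300, 16)

template = (
    "You are a helpful language and speech assistant. You are able to understand "
    "the speech content that the user provides, and assist the user with a variety "
    "of tasks using natural language. <speech> Please answer the questions in the "
    "user's input speech."
)
x = build_llm_input(template, S, {}, rng)
print(H_prime.shape, S.shape, x.shape[1])  # (300, 40) (300, 16) 16
```

Because a row-major reshape of the first \((N/k)\cdot k\) rows keeps consecutive frames together, row \(i\) of \(\vec{H}'\) is exactly the concatenation \([\vec{h}_{ik+1}; \ldots; \vec{h}_{ik+k}]\), so no explicit loop is needed.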

The `<speech>` placeholder is filled with the adapted representation of \(\vec{H}'\). The LLM auto-regressively generates the output text, as all LLaMA models do, and is trained with cross-entropy loss. To generate speech directly, the LLM's output hidden states are fed to a speech decoder as each token is predicted. Note that even though the hidden states reach the speech decoder sequentially, one token after another, the decoder never feeds its own predicted speech units back in as input, which makes this a non-autoregressive technique. As a result, generating the speech is almost as fast as generating the text, which differentiates this approach from a cascaded pipeline. The speech decoder produces discrete speech units, which are basically integer indices in the range \([1, K]\), and a vocoder turns them into audio. During inference, the system waits until a few speech units have accumulated and then synthesizes a partial audio segment from them, thus producing streaming audio.
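The decoding path can be illustrated with a small pure-Python sketch. It assumes, as a simplification, a CTC-style non-autoregressive decoder: each LLM output hidden state is repeated \(\lambda\) times (upsampling), the decoder predicts one label per position (here the predictions are simply given, since no real decoder is implemented), and greedy CTC collapsing (merge repeats, then drop a blank label, assumed to be 0) turns those predictions into speech units. The names `upsample`, `ctc_units`, `stream_chunks`, the blank index, \(\lambda\), and the chunk size are hypothetical choices for illustration.

```python
from typing import Iterable, Iterator, List

BLANK = 0  # assumed CTC blank label; real speech units are 1..K


def upsample(hidden_states: List[List[float]], lam: int) -> List[List[float]]:
    """Repeat each LLM output hidden state lam times, giving the decoder
    lam output positions per text token."""
    return [h for h in hidden_states for _ in range(lam)]


def ctc_units(frame_preds: Iterable[int], blank: int = BLANK) -> Iterator[int]:
    """Greedy CTC collapse: merge consecutive repeats, then drop blanks.
    As a generator it also works on predictions arriving one at a time."""
    prev = None
    for p in frame_preds:
        if p != prev and p != blank:
            yield p
        prev = p


def stream_chunks(units: Iterable[int], chunk_size: int) -> Iterator[List[int]]:
    """Group units into chunks of chunk_size for the vocoder; flush the tail."""
    buf: List[int] = []
    for u in units:
        buf.append(u)
        if len(buf) == chunk_size:
            yield buf
            buf = []
    if buf:
        yield buf


# Two text tokens with toy 2-d hidden states, lam = 4 -> 8 decoder positions.
positions = upsample([[0.1, 0.2], [0.3, 0.4]], lam=4)
# Hypothetical per-position argmax labels (a real decoder would predict these).
frame_preds = [0, 5, 5, 0, 7, 7, 0, 7]
units = list(ctc_units(frame_preds))  # [5, 7, 7]: the blank separates the two 7s
chunks = list(stream_chunks(ctc_units(frame_preds), chunk_size=2))
for chunk in chunks:
    print(chunk)  # a vocoder would synthesize one partial audio segment per chunk
```

Writing `ctc_units` as a generator is what makes streaming work in this sketch: units come out as soon as the predictions for each position are available, and `stream_chunks` hands them to the vocoder a few at a time instead of waiting for the full utterance.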

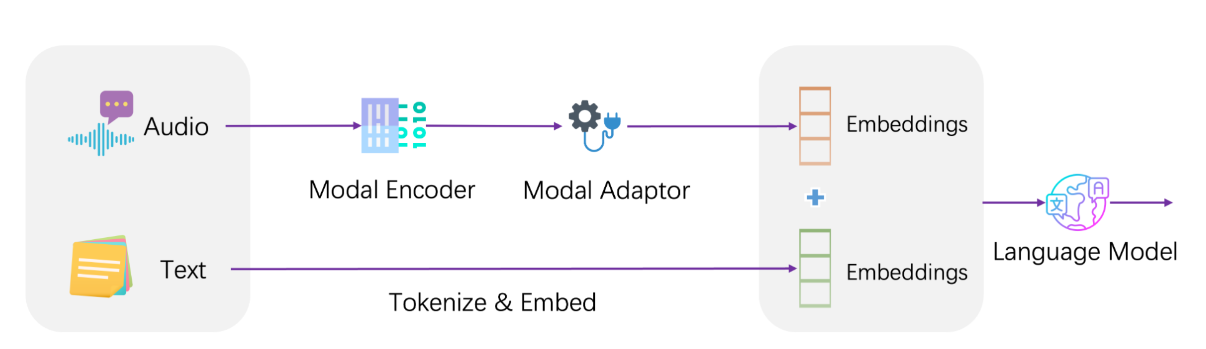

LLaSM (Shu et al., 2023) is another model that understands speech and text, but it produces text-only output. The core of this paper revolves around aligning speech embeddings with text embeddings, which it does through a separate pre-training phase for a speech adaptor. Whisper (Radford et al., 2023) encodes the audio to produce speech embeddings, while how the text embeddings are produced is not mentioned in the paper. That seems like a minor point in 2024, though.

During pre-training, the encoder and the LLM are frozen. The adaptor is trained on an ASR task where the instruction is text, for example, “Transcribe the following speech into text”; there are many similar instructions in Chinese and English. The adaptor takes the audio embeddings from Whisper, and its outputs are combined with the text instruction embeddings in an interleaved manner, as in the LLaMA-Omni paper. This interleaving technique is getting quite popular. In the next phase, cross-modal instruction fine-tuning, the adaptor and the LLM are trained together, which is basically multi-task training with cross-entropy loss. One of the primary achievements of this paper is creating the cross-modal instruction fine-tuning dataset, LLaSM-Audio-Instructions.
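The two LLaSM training stages and the interleaved input can be summarized with a small Python sketch. The module names, the `Audio` wrapper, and the toy `toy_embed_text` function are hypothetical placeholders rather than LLaSM's actual code; the sketch only encodes what is described above: the adaptor alone is trained in pre-training, the adaptor and the LLM are trained during cross-modal instruction fine-tuning, and audio embeddings are spliced in between text embeddings to form one input sequence.

```python
from dataclasses import dataclass
from typing import Callable, List, Set, Union

# Hypothetical module names; which ones receive gradient updates per stage.
ALL_MODULES = {"whisper_encoder", "adaptor", "llm"}
TRAINABLE = {
    "pretrain": {"adaptor"},         # encoder and LLM frozen, ASR-style instructions
    "finetune": {"adaptor", "llm"},  # cross-modal instruction fine-tuning
}


def frozen_modules(stage: str) -> Set[str]:
    """Modules whose weights stay fixed during the given stage."""
    return ALL_MODULES - TRAINABLE[stage]


@dataclass
class Audio:
    """Placeholder for adapted audio embeddings (one vector per position)."""
    vectors: List[List[float]]


Segment = Union[str, Audio]


def interleave(segments: List[Segment],
               embed_text: Callable[[str], List[List[float]]]) -> List[List[float]]:
    """Flatten an ordered mix of text and audio segments into one LLM input
    sequence: text is embedded, audio vectors are inserted unchanged."""
    seq: List[List[float]] = []
    for seg in segments:
        if isinstance(seg, Audio):
            seq.extend(seg.vectors)
        else:
            seq.extend(embed_text(seg))
    return seq


def toy_embed_text(text: str) -> List[List[float]]:
    """Hypothetical stand-in for the LLM's token embeddings: one 2-d vector per word."""
    return [[float(len(word)), 0.0] for word in text.split()]


segments = [
    "Transcribe the following speech into text",
    Audio([[0.5, 0.5], [0.6, 0.6]]),
]
seq = interleave(segments, toy_embed_text)
print(len(seq))                            # 8: six word vectors + two audio vectors
print(sorted(frozen_modules("pretrain")))  # ['llm', 'whisper_encoder']
print(sorted(frozen_modules("finetune")))  # ['whisper_encoder']
```

Keeping the stage schedule as data rather than code makes it easy to see that the only difference between the two phases is whether the LLM is unfrozen.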

Similarities

I am noticing that recent works are moving away from cascaded pipelines, which first transcribe the speech with an ASR model and then apply TTS to produce audio. Interleaving speech and text embeddings seems to be the next hot topic in this field.

References

2024

-

The Llama 3 Herd of Models

Abhimanyu Dubey, Abhinav Jauhri, Abhinav Pandey, and 532 more authors

Aug 2024

arXiv:2407.21783

Modern artificial intelligence (AI) systems are powered by foundation models. This paper presents a new set of foundation models, called Llama 3. It is a herd of language models that natively support multilinguality, coding, reasoning, and tool usage. Our largest model is a dense Transformer with 405B parameters and a context window of up to 128K tokens. This paper presents an extensive empirical evaluation of Llama 3. We find that Llama 3 delivers comparable quality to leading language models such as GPT-4 on a plethora of tasks. We publicly release Llama 3, including pre-trained and post-trained versions of the 405B parameter language model and our Llama Guard 3 model for input and output safety. The paper also presents the results of experiments in which we integrate image, video, and speech capabilities into Llama 3 via a compositional approach. We observe this approach performs competitively with the state-of-the-art on image, video, and speech recognition tasks. The resulting models are not yet being broadly released as they are still under development.

-

LLaMA-Omni: Seamless Speech Interaction with Large Language Models

Qingkai Fang, Shoutao Guo, Yan Zhou, and 3 more authors

Sep 2024

arXiv:2409.06666

-

GPT-4o System Card

OpenAI, Aaron Hurst, Adam Lerer, and 416 more authors

Oct 2024

arXiv:2410.21276

GPT-4o is an autoregressive omni model that accepts as input any combination of text, audio, image, and video, and generates any combination of text, audio, and image outputs. It’s trained end-to-end across text, vision, and audio, meaning all inputs and outputs are processed by the same neural network. GPT-4o can respond to audio inputs in as little as 232 milliseconds, with an average of 320 milliseconds, which is similar to human response time in conversation. It matches GPT-4 Turbo performance on text in English and code, with significant improvement on text in non-English languages, while also being much faster and 50% cheaper in the API. GPT-4o is especially better at vision and audio understanding compared to existing models. In line with our commitment to building AI safely and consistent with our voluntary commitments to the White House, we are sharing the GPT-4o System Card, which includes our Preparedness Framework evaluations. In this System Card, we provide a detailed look at GPT-4o’s capabilities, limitations, and safety evaluations across multiple categories, focusing on speech-to-speech while also evaluating text and image capabilities, and measures we’ve implemented to ensure the model is safe and aligned. We also include third-party assessments on dangerous capabilities, as well as discussion of potential societal impacts of GPT-4o’s text and vision capabilities.

2023

-

Robust speech recognition via large-scale weak supervision

Alec Radford, Jong Wook Kim, Tao Xu, and 3 more authors

In Proceedings of the 40th International Conference on Machine Learning, Honolulu, Hawaii, USA, Oct 2023

We study the capabilities of speech processing systems trained simply to predict large amounts of transcripts of audio on the internet. When scaled to 680,000 hours of multilingual and multitask supervision, the resulting models generalize well to standard benchmarks and are often competitive with prior fully supervised results without the need for any dataset specific fine-tuning. When compared to humans, the models approach their accuracy and robustness. We are releasing models and inference code to serve as a foundation for further work on robust speech processing.

-

LLaSM: Large Language and Speech Model

Yu Shu, Siwei Dong, Guangyao Chen, and 5 more authors

Sep 2023

arXiv:2308.15930

Multi-modal large language models have garnered significant interest recently. Though, most of the works focus on vision-language multi-modal models providing strong capabilities in following vision-and-language instructions. However, we claim that speech is also an important modality through which humans interact with the world. Hence, it is crucial for a general-purpose assistant to be able to follow multi-modal speech-and-language instructions. In this work, we propose Large Language and Speech Model (LLaSM). LLaSM is an end-to-end trained large multi-modal speech-language model with cross-modal conversational abilities, capable of following speech-and-language instructions. Our early experiments show that LLaSM demonstrates a more convenient and natural way for humans to interact with artificial intelligence. Specifically, we also release a large Speech Instruction Following dataset LLaSM-Audio-Instructions. Code and demo are available at https://github.com/LinkSoul-AI/LLaSM and https://huggingface.co/spaces/LinkSoul/LLaSM. The LLaSM-Audio-Instructions dataset is available at https://huggingface.co/datasets/LinkSoul/LLaSM-Audio-Instructions.